Hooshang: Social Robot Platform

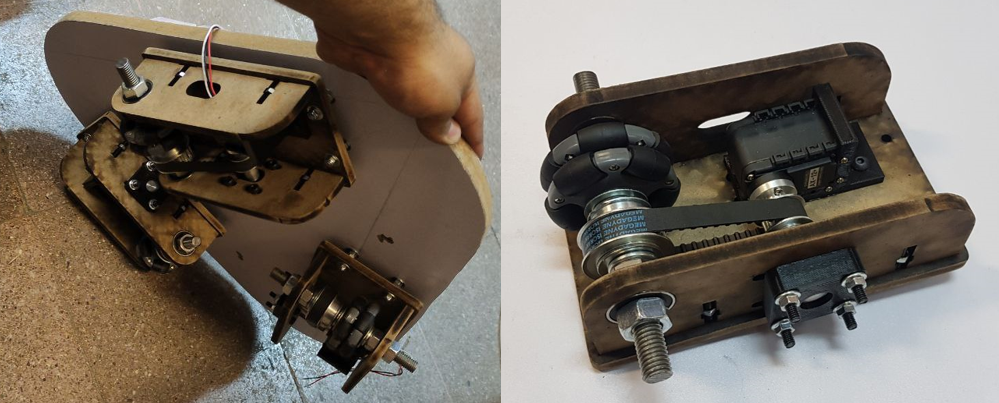

Hooshang 2.0 Movement Module

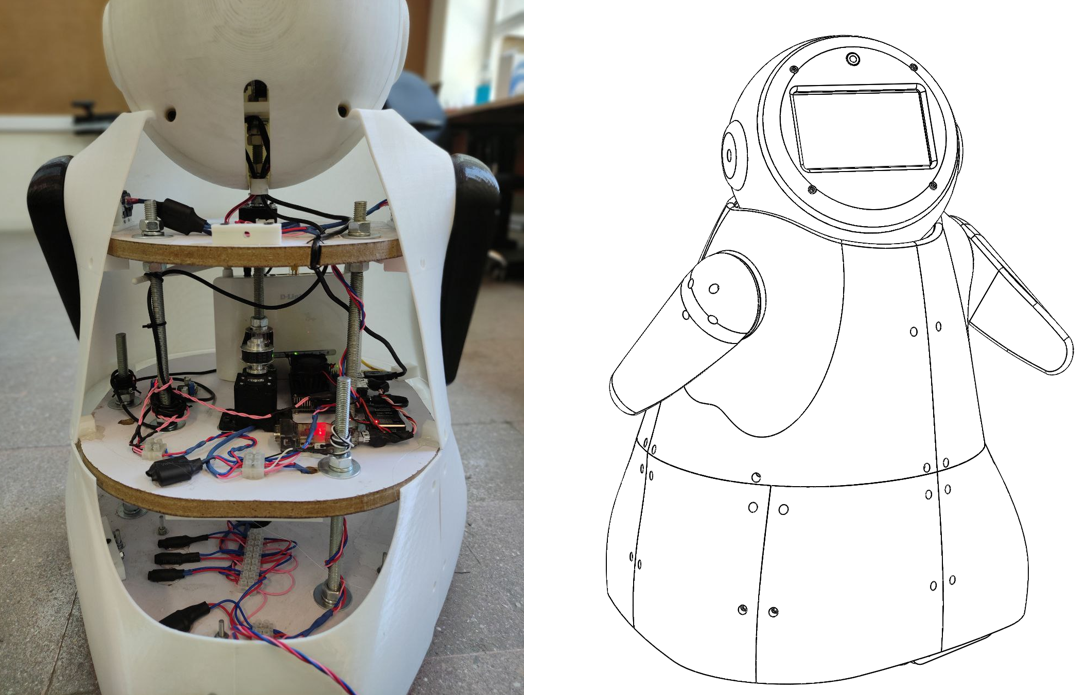

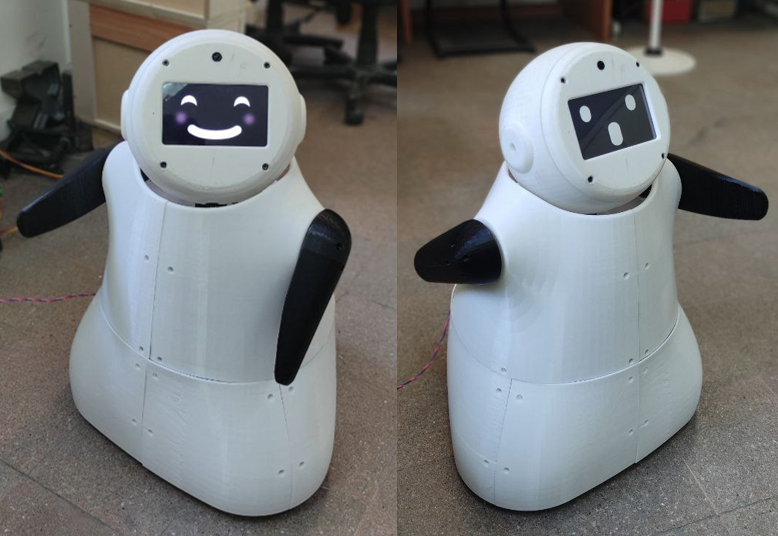

Our previous systems had no cognitive module, and their interactions were largely hardcoded, but the results and experts’ opinions were promising. Building on what we learned from those experiences, we developed Hooshang 2.0, which includes all three required modules. The interaction module is an Android tablet that displays the robot’s face, and a fisheye-lens camera provides a wide-angle view. The robot’s brain is an NVIDIA Jetson Nano, which controls all Dynamixel motors through a USB adapter. The robot also carries a local Wi-Fi router for ease of communication. The movement module uses a triangular omni-wheel vectoring mechanism for base motion. Each hand has one degree of freedom, and the head has two degrees of freedom provided by a pan-tilt mechanism.
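To illustrate how a triangular omni-wheel base can turn a desired body velocity into wheel commands, here is a minimal sketch of the standard inverse kinematics for three omni wheels spaced 120° apart. The wheel angles, base radius, wheel radius, and the function name `wheel_speeds` are assumptions chosen for illustration. They are not Hooshang 2.0's actual dimensions or control code.

```python
import math

# Assumed geometry for illustration only (not Hooshang 2.0's real dimensions).
WHEEL_ANGLES_DEG = (90.0, 210.0, 330.0)  # wheel positions around the base
BASE_RADIUS_M = 0.15                     # distance from base center to each wheel
WHEEL_RADIUS_M = 0.03                    # radius of each omni wheel


def wheel_speeds(vx, vy, omega,
                 base_radius=BASE_RADIUS_M,
                 wheel_radius=WHEEL_RADIUS_M,
                 wheel_angles_deg=WHEEL_ANGLES_DEG):
    """Return wheel angular speeds (rad/s) for a body velocity.

    vx, vy: linear velocity of the base in its own frame (m/s).
    omega:  angular velocity of the base (rad/s, counterclockwise positive).
    """
    speeds = []
    for angle_deg in wheel_angles_deg:
        theta = math.radians(angle_deg)
        # Each wheel drives along the tangent direction (-sin(theta), cos(theta)).
        # Project the body velocity onto that direction and add the rotation term.
        rim_speed = -math.sin(theta) * vx + math.cos(theta) * vy + base_radius * omega
        speeds.append(rim_speed / wheel_radius)
    return speeds


if __name__ == "__main__":
    # Example: translate along +x at 0.2 m/s while slowly rotating.
    print(wheel_speeds(0.2, 0.0, 0.1))
```

With this layout, a pure rotation drives all three wheels at the same speed, while a pure translation produces wheel speeds that sum to zero. On a real robot the resulting values would still need to be converted to the motor controller's units.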

Hooshang 2.0

The Hooshang series was a unique experience for my team because of its long, progressive timeline, which required multiple development and redesign stages. Hooshang 2.0 is now operational in the Advanced Robotics and Intelligent Systems Lab at the University of Tehran, and it will be tested in rehabilitation scenarios for children with autism spectrum disorder (ASD). Although the project’s overall cost increased gradually over time, it remains more affordable than available commercial robots. We hope to open-source all CAD files and source code soon after publishing our paper.

Hooshang 2.0 Interactions

Hooshang 2.0 Head & Hands Movements

Hooshang 2.0 Base Motion

Developing socially intelligent robots requires tight integration of robotics, computer vision, speech processing, and web technologies. We present the Socially-interactive Robot Software (SROS) platform, an open-source framework that addresses this need through a modular, layered architecture. SROS connects the Robot Operating System (ROS) layer, which is responsible for movement, with web and Android interface layers using standard messaging and application programming interfaces (APIs). Specialized perceptual and interaction skills are implemented as reusable ROS services that can be deployed on any robot. This enables rapid prototyping of collaborative behaviors by synchronizing perception of the environment with physical actions. We experimentally validated core SROS technologies, including computer vision, speech processing, and speech autocompletion using GPT-2, all implemented as plug-and-play ROS services. These validated capabilities support SROS's effectiveness in developing socially interactive robots through synchronized cross-domain interaction. Demonstrations that synchronize multimodal behaviors on an example platform illustrate how SROS lowers barriers for researchers to advance the state of the art in customizable, adaptive human-robot systems through novel applications that integrate perception and social skills.
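The plug-and-play idea described above can be sketched without ROS as a small registry that maps skill names to handlers and exchanges JSON requests and replies, which is roughly how a web or Android layer might talk to a skill layer. This is not the SROS API. The class `SkillRegistry`, the message fields `skill` and `args`, and the `wave_hand` handler are all hypothetical names introduced for illustration.

```python
import json
from typing import Callable, Dict

Handler = Callable[[dict], dict]


class SkillRegistry:
    """Maps skill names to handlers, loosely mimicking request/response services."""

    def __init__(self) -> None:
        self._skills: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        """Add a skill; refuse duplicates so names stay unambiguous."""
        if name in self._skills:
            raise ValueError(f"skill already registered: {name}")
        self._skills[name] = handler

    def handle(self, raw_message: str) -> str:
        """Decode a JSON request, run the named skill, and encode the reply."""
        try:
            request = json.loads(raw_message)
        except json.JSONDecodeError:
            return json.dumps({"ok": False, "error": "invalid JSON"})
        if not isinstance(request, dict):
            return json.dumps({"ok": False, "error": "request must be an object"})
        name = request.get("skill")
        if not isinstance(name, str) or name not in self._skills:
            return json.dumps({"ok": False, "error": "unknown skill"})
        result = self._skills[name](request.get("args", {}))
        return json.dumps({"ok": True, "result": result})


def wave_hand(args: dict) -> dict:
    """Hypothetical movement skill: only reports what it would do."""
    return {"action": "wave", "side": args.get("side", "right")}


# Register one skill and send it a request, as an interface layer might.
registry = SkillRegistry()
registry.register("wave_hand", wave_hand)
reply = registry.handle(json.dumps({"skill": "wave_hand", "args": {"side": "left"}}))
```

Keeping each skill behind a name and a plain request/reply contract is what lets new perception or interaction skills be added without changing the caller. In a ROS-based system the registry's role would be played by the ROS service mechanism itself.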

Members: Alireza Kargar, Mahta Akhyani, Dr. Hadi Moradi, Shahab Nikkhoo, Farzam Masoumi

Collaborating Centers:

Papers:
